Context aware human activity prediction in videos using Hand-Centric features and Dynamic programming based prediction algorithm

Abstract

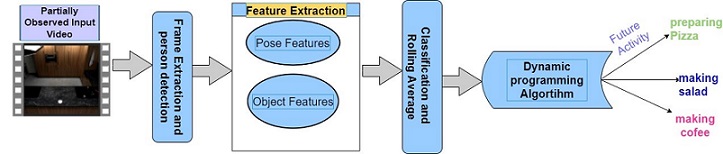

Activity prediction in videos deals with predicting a human activity before it is fully observed. This work presents a context-aware activity prediction approach that can predict long-duration, complex human activities from partially observed video. Here, we consider human poses and interacting objects as the context for activity prediction. The major challenges of context-aware activity prediction are to account for different interacting objects and to differentiate visually similar activity classes, such as cutting a tomato and cutting an apple. This article explores the use of hand-centric features for predicting human activities that consist of various human-object interactions. A Dynamic Programming Based Activity Prediction Algorithm (DPAPA) is proposed for finding the future activity label based on observed actions. The proposed DPAPA does not employ Markovian dependencies or hierarchical representations of activities, and hence is well suited for predicting human activities, which are often non-Markovian and non-hierarchical. We evaluate the approach on the MPII Cooking Activities dataset, which consists of complex, long-duration activities.

ISSN 2321-4635
